In Kenneth Grahame’s 1908 novel The Wind in the Willows, a Mole meets a Water Rat who lives on a River. The Rat explains how the River permeates his life: “It’s brother and sister to me, and aunts, and company, and food and drink, and (naturally) washing.” As the River plays many roles in the Rat’s life, so does Carnot’s theorem play many roles in a thermodynamicist’s.

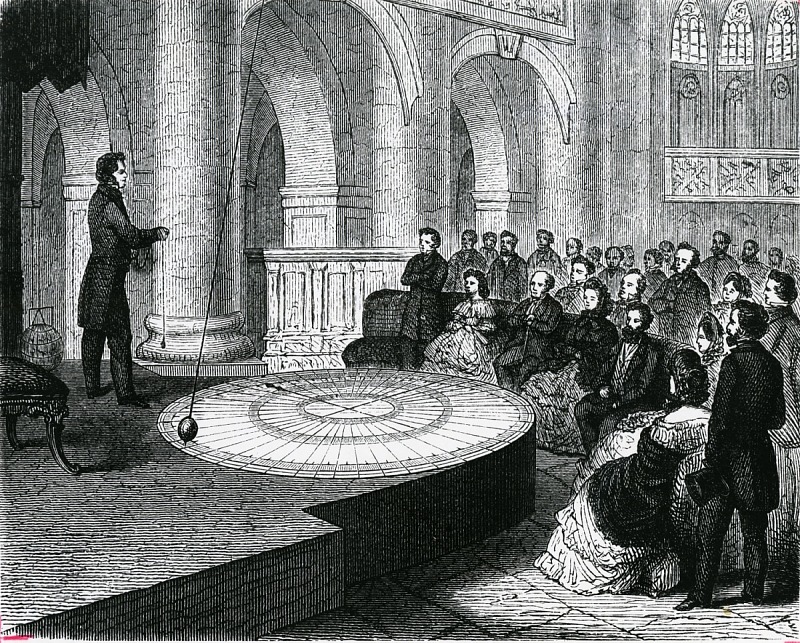

Nicolas Léonard Sadi Carnot lived in France during the turn of the 19th century. His father named him Sadi after the 13th-century Persian poet Saadi Shirazi. Said father led a colorful life himself,1 working as a mathematician, engineer, and military commander for and before the Napoleonic Empire. Sadi Carnot studied in Paris at the École Polytechnique, whose members populate a “Who’s Who” list of science and engineering.

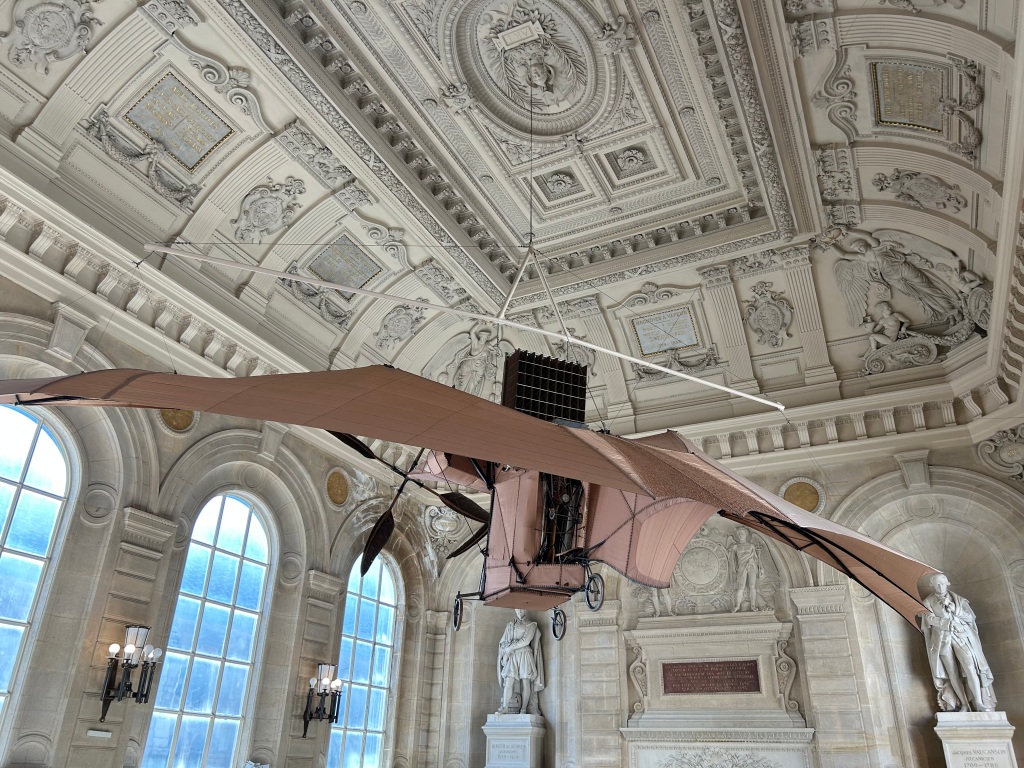

As Carnot grew up, the Industrial Revolution was humming. Steam engines were producing reliable energy on vast scales; factories were booming; and economies were transforming. France’s old enemy Britain enjoyed two advantages. One consisted of inventors: Englishmen Thomas Savery and Thomas Newcomen invented the steam engine. Scotsman James Watt then improved upon Newcomen’s design until it became practical. Second, northern Britain contained loads of coal that industrialists could mine to power her engines. France had less coal. So if you were a French engineer during Carnot’s lifetime, you should have cared about engines’ efficiencies: how effectively engines used fuel.2

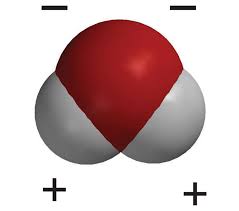

Carnot proved a fundamental limitation on engines’ efficiencies. His theorem governs engines that draw energy from heat—rather than from, say, the motional energy of water cascading down a waterfall. In Carnot’s argument, a heat engine interacts with a cold environment and a hot environment. (Many car engines fall into this category: the hot environment is burning gasoline. The cold environment is the surrounding air into which the car dumps exhaust.) Heat flows from the hot environment to the cold. The engine siphons off some heat and converts it into work. Work is coordinated, well-organized energy that one can directly harness to perform a useful task, such as turning a turbine. In contrast, heat is the disordered energy of particles shuffling about randomly. Heat engines transform random heat into coordinated work.

An engine’s efficiency is the bang we get for our buck—the upshot we gain, compared to the cost we spend. Running an engine costs the heat that flows between the environments: the more heat flows, the more the hot environment cools, so the less effectively it can serve as a hot environment in the future. An analogous statement concerns the cold environment. So a heat engine’s efficiency is the work produced, divided by the heat spent.

Carnot upper-bounded the efficiency achievable by every heat engine of the sort described above. Let $T_{\rm C}$ denote the cold environment’s temperature; and $T_{\rm H}$, the hot environment’s. The efficiency can’t exceed $1 - T_{\rm C} / T_{\rm H}$. What a simple formula for such an extensive class of objects! Carnot’s theorem governs not only many car engines (Otto engines), but also the Stirling engine that competed with the steam engine, its cousin the Ericsson engine, and more.
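To get a feel for the numbers, here is a minimal Python sketch of the bound. The function name `carnot_efficiency` and the example temperatures are illustrative assumptions, not values from the text. Temperatures are assumed to be absolute (measured in kelvins).

```python
def carnot_efficiency(t_cold, t_hot):
    """Upper bound on a heat engine's efficiency between two environments.

    Temperatures are absolute (kelvins). The name and signature are
    illustrative assumptions, not taken from any library.
    """
    if t_cold <= 0 or t_hot <= 0:
        raise ValueError("temperatures must be positive (kelvins)")
    if t_cold > t_hot:
        raise ValueError("the cold environment can't be hotter than the hot one")
    return 1.0 - t_cold / t_hot


# Hypothetical temperatures: a 300 K cold environment and a 600 K hot one.
bound = carnot_efficiency(300.0, 600.0)
print(f"Carnot bound: {bound:.0%}")  # prints "Carnot bound: 50%"
```

With these made-up numbers, no engine could convert more than half of the heat spent into work. Raising the hot temperature or lowering the cold one loosens the cap, but the cap reaches 100% only in the unattainable limit of a cold environment at absolute zero.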

In addition to generality and simplicity, Carnot’s bound boasts practical and fundamental significance. Capping engine efficiencies caps the output one can expect of a machine, factory, or economy. The cap also prevents engineers from wasting their time daydreaming about more-efficient engines.

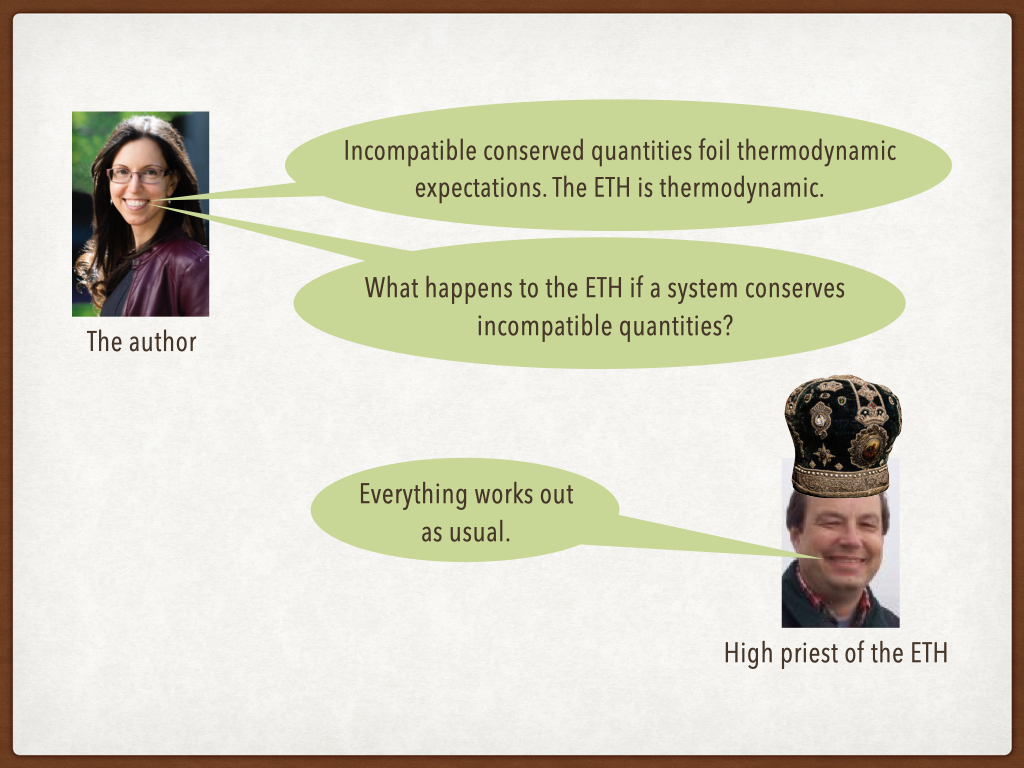

More fundamentally than these applications, Carnot’s theorem encapsulates the second law of thermodynamics. The second law helps us understand why time flows in only one direction. And what’s deeper or more foundational than time’s arrow? People often cast the second law in terms of entropy, but many equivalent formulations express the law’s contents. The formulations share a flavor often synopsized with “You can’t win.” Just as we can’t grow younger, we can’t beat Carnot’s bound on engines.

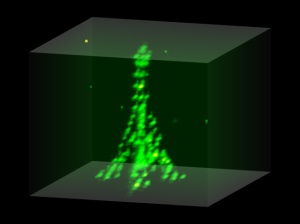

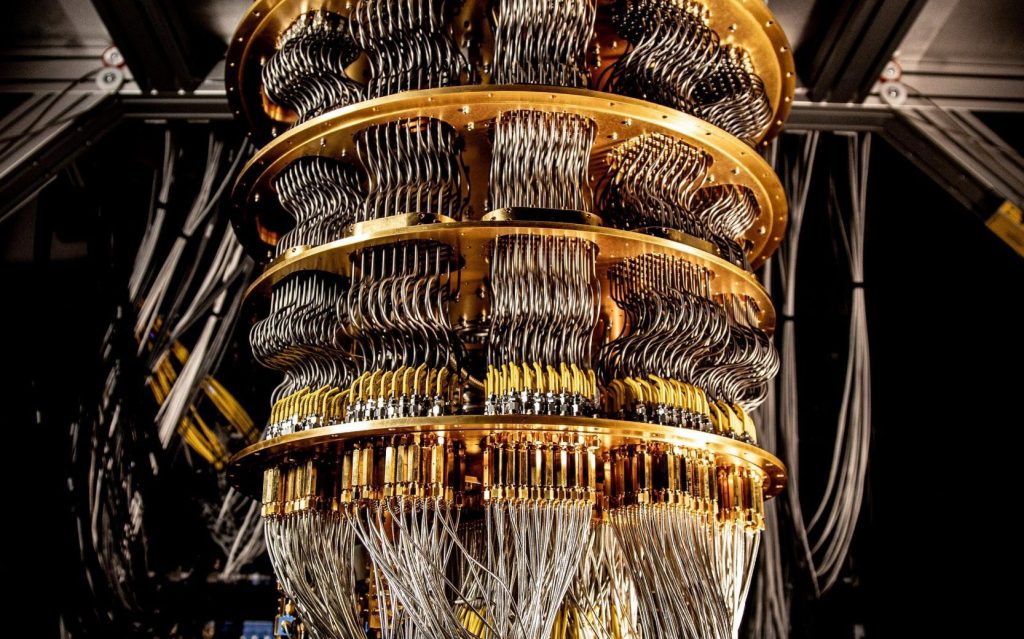

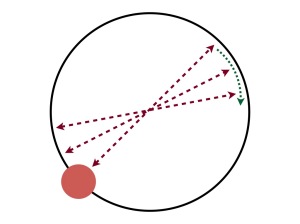

One might expect no engine to achieve the greatest efficiency imaginable: $1 - T_{\rm C} / T_{\rm H}$, called the Carnot efficiency. This expectation is incorrect in one way and correct in another. Carnot did design an engine that could operate at his eponymous efficiency: an eponymous engine. A Carnot engine can manifest as the thermodynamicist’s favorite physical system: a gas in a box topped by a movable piston. The gas undergoes four strokes, or steps, to perform work. The strokes form a closed cycle, returning the gas to its initial conditions.3

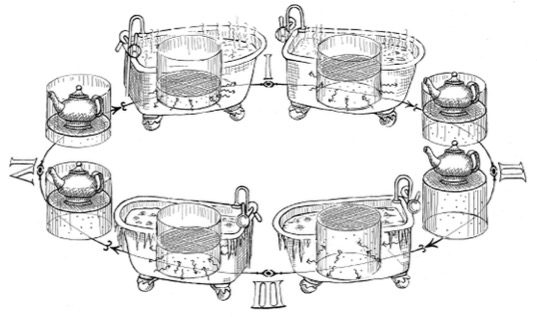

Steampunk artist Todd Cahill beautifully illustrated the Carnot cycle for my book. The gas performs useful work because a teapot sits atop the piston. Pushing the piston upward, the gas lifts the teapot. You can find a more detailed description of Carnot’s engine in Chapter 4 of the book, but I’ll recap the cycle here.

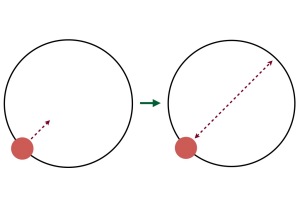

The gas expands during stroke 1, pushing the piston and so outputting work. Maintaining contact with the hot environment, the gas remains at the temperature $T_{\rm H}$. The gas then disconnects from the hot environment. Yet the gas continues to expand throughout stroke 2, lifting the teapot further. Forfeiting energy, the gas cools. It ends stroke 2 at the temperature $T_{\rm C}$.

The gas contacts the cold environment throughout stroke 3. The piston pushes on the gas, compressing it. At the end of the stroke, the gas disconnects from the cold environment. The piston continues compressing the gas throughout stroke 4, performing more work on the gas. This work warms the gas back up to $T_{\rm H}$.

In summary, Carnot’s engine begins hot, performs work, cools down, has work performed on it, and warms back up. The gas performs more work on the piston than the piston performs on it. Therefore, the teapot rises (during strokes 1 and 2) more than it descends (during strokes 3 and 4).
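The four strokes can be tallied numerically. The sketch below assumes that the gas is an ideal monatomic gas driven quasi-statically (infinitely slowly), which the description above doesn't specify. The function name, the one-mole default, and the example volumes and temperatures are hypothetical, too. The code computes the work the gas performs during each stroke, then checks that the net work, divided by the heat drawn from the hot environment, equals one minus the ratio of the cold temperature to the hot temperature.

```python
import math

R = 8.314462618  # molar gas constant, in J/(mol K)


def carnot_cycle_work(t_hot, t_cold, v_start, v_end, n_moles=1.0, gamma=5.0 / 3.0):
    """Work done BY an ideal gas in each stroke of a quasi-static Carnot cycle.

    v_start and v_end are the gas's volumes at the beginning and end of
    stroke 1. Returns (list of four works in joules, heat absorbed from
    the hot environment in joules). All names here are illustrative.
    """
    log_ratio = math.log(v_end / v_start)
    heat_capacity = n_moles * R / (gamma - 1.0)  # constant-volume heat capacity

    # Stroke 1: isothermal expansion at t_hot. An ideal gas's energy stays
    # fixed at constant temperature, so the heat absorbed equals the work output.
    w1 = n_moles * R * t_hot * log_ratio
    heat_from_hot = w1

    # Stroke 2: expansion without heat exchange. The work comes out of the
    # gas's internal energy, cooling the gas from t_hot to t_cold.
    w2 = heat_capacity * (t_hot - t_cold)

    # Stroke 3: isothermal compression at t_cold. The two heat-free strokes
    # force the same volume ratio as in stroke 1, traversed in reverse.
    w3 = -n_moles * R * t_cold * log_ratio

    # Stroke 4: compression without heat exchange, warming the gas to t_hot.
    w4 = -heat_capacity * (t_hot - t_cold)

    return [w1, w2, w3, w4], heat_from_hot


# Hypothetical example: 600 K and 300 K environments, volume doubling in stroke 1.
works, heat_from_hot = carnot_cycle_work(600.0, 300.0, v_start=1.0e-3, v_end=2.0e-3)
efficiency = sum(works) / heat_from_hot

for stroke, work in enumerate(works, start=1):
    print(f"stroke {stroke}: work by gas = {work:+.1f} J")
print(f"efficiency = {efficiency:.3f}")
assert math.isclose(efficiency, 1.0 - 300.0 / 600.0)
```

The works of strokes 2 and 4 cancel, so the net work comes entirely from the difference between the two isothermal strokes. That difference is positive because the gas expands while hot and is compressed while cold.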

At what cost, if the engine operates at the Carnot efficiency? The engine mustn’t waste heat. One wastes heat by roiling up the gas unnecessarily—by expanding or compressing it too quickly. The gas must stay in equilibrium, a calm, quiescent state. One can keep the gas quiescent only by running the cycle infinitely slowly. The cycle will take an infinitely long time, outputting zero power (work per unit time). So one can achieve the perfect efficiency only in principle, not in practice, and only by sacrificing power. Again, you can’t win.

Carnot’s theorem may sound like the Eeyore of physics, all negativity and depression. But I view it as a companion and backdrop as rich, for thermodynamicists, as the River is for the Water Rat. Carnot’s theorem curbs diverse technologies in practical settings. It captures the second law, a foundational principle. The Carnot cycle provides intuition, serving as a simple example on which thermodynamicists try out new ideas, such as quantum engines. Carnot’s theorem also provides what physicists call a sanity check: whenever a researcher devises a new (for example, quantum) heat engine, they can check that the engine obeys Carnot’s theorem, to help confirm their proposal’s accuracy. Carnot’s theorem also serves as a school exercise and a historical tipping point: the theorem initiated the development of thermodynamics, which continues to this day.

So Carnot’s theorem is practical and fundamental, pedagogical and cutting-edge—brother and sister, and aunts, and company, and food and drink. I just wouldn’t recommend trying to wash your socks in Carnot’s theorem.

1To a theoretical physicist, working as a mathematician and an engineer amounts to leading a colorful life.

2People other than Industrial Revolution–era French engineers should care, too.

3A cycle doesn’t return the hot and cold environments to their initial conditions, as explained above.