The autumn of my sophomore year of college was mildly hellish. I took the equivalent of three semester-long computer-science and physics courses, atop other classwork; co-led a public-speaking self-help group; and coordinated a celebrity visit to campus. I lived at my desk and in office hours, always declining my flatmates’ invitations to watch The West Wing.

Hard as I studied, my classmates enjoyed greater facility with the computer-science curriculum. They saw immediately how long an algorithm would run, while I hesitated and then computed the run time step by step. I felt behind. So I protested when my professor said, “You’re good at this.”

I now see that we were focusing on different facets of learning. I rued my lack of intuition. My classmates had gained intuition by exploring computer science in high school, then slow-cooking their experiences on a mental back burner. Their long-term exposure to the material provided familiarity—the ability to recognize a new problem as belonging to a class they’d seen examples of. I was cooking course material in a mental microwave set on “high,” as a semester’s worth of material was crammed into ten weeks at my college.

My professor wasn’t measuring my intuition. He only saw that I knew how to compute an algorithm’s run time. I’d learned the material required of me—more than I realized, being distracted by what I hadn’t learned that difficult autumn.

We can learn a staggering amount when pushed far from our comfort zones—and not only we humans can. So can simple collections of particles.

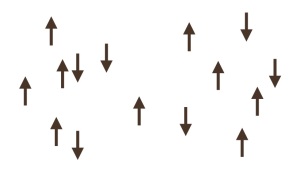

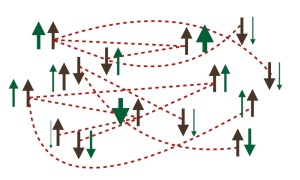

Examples include a classical spin glass. A spin glass is a collection of particles that shares some properties with a magnet. Both a magnet and a spin glass consist of tiny mini-magnets called spins. Although I’ve blogged about quantum spins before, I’ll focus on classical spins here. We can imagine a classical spin as a little arrow that points upward or downward. A bunch of spins can form a material. If the spins tend to point in the same direction, the material may be a magnet of the sort that’s sticking the faded photo of Fluffy to your fridge.

The spins may interact with each other, similarly to how electrons interact with each other. Not entirely similarly, though: electrons push each other away. In contrast, a spin may coax its neighbors into aligning or anti-aligning with it. Suppose that the interactions are random: Any given spin may force one neighbor into alignment, gently ask another neighbor to align, entreat a third neighbor to anti-align, and have nothing to say to neighbors four and five.

The spin glass can interact with the external world in two ways. First, we can stick the spins in a magnetic field, as by placing magnets above and below the glass. If aligned with the field, a spin has negative energy; and, if antialigned, positive energy. We can sculpt the field so that it varies across the spin glass. For instance, spin 1 can experience a strong upward-pointing field, while spin 2 experiences a weak downward-pointing field.
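The energy bookkeeping described so far can be sketched in a few lines of Python. This is a minimal illustration, not the model from our paper: representing a spin as +1 (up) or -1 (down), the function names `field_energy` and `coupling_energy`, and the sign convention that a positive coupling favors alignment are all assumptions made for the example.

```python
def field_energy(spins, fields):
    """Energy of the spins in their local fields: E = -sum_i h_i * s_i.

    A spin aligned with its field (same sign) contributes negative energy;
    an antialigned spin contributes positive energy.
    """
    return -sum(h * s for h, s in zip(fields, spins))


def coupling_energy(spins, couplings):
    """Energy of pairwise interactions: E = -sum J_ab * s_a * s_b.

    `couplings` maps a pair of spin indices (a, b) to a strength J_ab.
    Assumed convention: J > 0 coaxes the pair to align, J < 0 to anti-align,
    and a missing pair means the two spins have nothing to say to each other.
    """
    return -sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())


# Spin 1 feels a strong upward field; spin 2 feels a weak downward field.
fields = [2.0, -0.5]
aligned = [+1, -1]   # each spin points along its own field
flipped = [-1, +1]   # each spin points against its own field

print(field_energy(aligned, fields))   # -2.5
print(field_energy(flipped, fields))   # 2.5

# A pair that is asked to anti-align, and does, lowers its energy.
print(coupling_energy(aligned, {(0, 1): -1.0}))  # -1.0
```

The total energy of a configuration would be the sum of the two contributions.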

Second, say that the spins occupy a fixed-temperature environment, as I occupy a 74-degree-Fahrenheit living room. The spins can exchange heat with the environment. If releasing heat to the environment, a spin flips from having positive energy to having negative—from antialigning with the field to aligning.
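One common way to mimic this heat exchange on a computer is a Metropolis update. That choice is an assumption made for illustration, not necessarily the dynamics used in our paper. The sketch below treats a single spin in a field, measures temperature in units where Boltzmann's constant equals 1, and uses the hypothetical name `metropolis_flip`.

```python
import math
import random


def metropolis_flip(spin, field, temperature, rng):
    """Attempt to flip one spin (+1 or -1) sitting in a field.

    Returns (new_spin, heat_released). The spin's energy is -field * spin,
    so flipping changes the energy by delta_e = 2 * field * spin.
    Assumption: Metropolis acceptance, with Boltzmann's constant set to 1.
    """
    delta_e = 2.0 * field * spin
    # A flip that lowers the energy is always accepted; the spin dumps the
    # difference into the environment as heat. A flip that raises the energy
    # is accepted only occasionally, with probability exp(-delta_e / T).
    if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
        return -spin, -delta_e
    return spin, 0.0


rng = random.Random(0)
# An antialigned spin (pointing down in an upward field) flips into
# alignment and releases 2 units of heat.
print(metropolis_flip(-1, 1.0, 1.0, rng))  # (1, 2.0)
```

A negative `heat_released` means that the spin absorbed heat from the environment to flip out of alignment, which happens more often at high temperatures.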

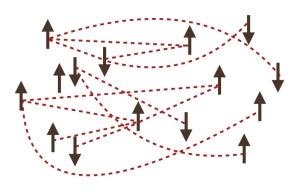

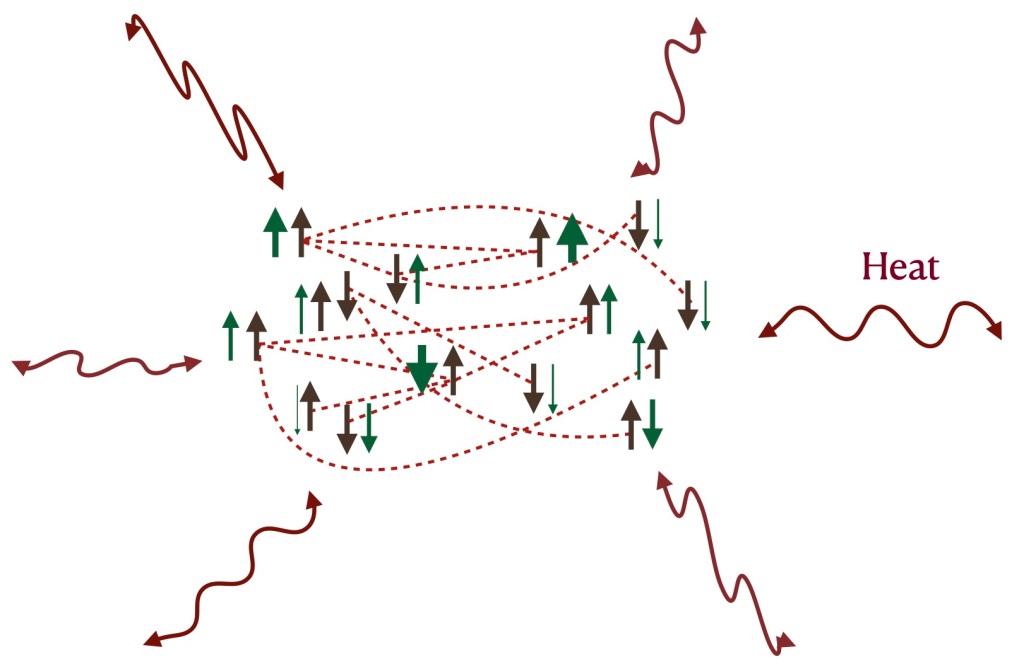

Let’s perform an experiment on the spins. First, we design a magnetic field using random numbers. Whether the field points upward or downward at any given spin is random, as is the strength of the field experienced by each spin. We sculpt three of these random fields and call the trio a drive.

Let’s randomly select a field from the drive and apply it to the spin glass for a while; again, randomly select a field from the drive and apply it; and continue many times. The energy absorbed by the spins from the fields spikes, then declines.

Now, let’s create another drive of three random fields. We’ll randomly pick a field from this drive and apply it; again, randomly pick a field from this drive and apply it; and so on. Again, the energy absorbed by the spins spikes, then tails off.

Here comes the punchline. Let’s return to applying the initial fields. The energy absorbed by the glass will spike, but not as high as before. The glass responds differently to a familiar drive than to a new drive. The spin glass recognizes the original drive; it has learned the first fields’ “fingerprint.” This learning happens when the fields push the glass far from equilibrium,¹ as I learned when pushed during my mildly hellish autumn.
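The three-stage experiment can be mocked up as a toy simulation. Everything specific here is an assumption made for illustration: the Gaussian couplings and fields, the Metropolis dynamics, the parameter values, and every function name. The work is counted as the energy change when the field switches while the spins stay fixed, a standard bookkeeping choice. Whether this toy reproduces the smaller second spike depends on the parameters, so no output is promised.

```python
import math
import random


def random_couplings(n, rng):
    """Symmetric random couplings J[i][j] with a zero diagonal."""
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            J[i][j] = J[j][i] = rng.gauss(0.0, 1.0) / math.sqrt(n)
    return J


def random_drive(n, n_fields, strength, rng):
    """A drive: a small set of random fields, one field value per spin."""
    return [[rng.gauss(0.0, strength) for _ in range(n)] for _ in range(n_fields)]


def relax(spins, field, J, temperature, n_sweeps, rng):
    """Let the spins exchange heat with the environment (Metropolis updates)."""
    n = len(spins)
    for _ in range(n_sweeps * n):
        i = rng.randrange(n)
        local = field[i] + sum(J[i][j] * spins[j] for j in range(n))
        delta_e = 2.0 * spins[i] * local  # energy change if spin i flips
        if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
            spins[i] = -spins[i]


def work_of_switch(spins, old_field, new_field):
    """Energy the spins absorb when the field changes at fixed spins."""
    return -sum((hn - ho) * s for hn, ho, s in zip(new_field, old_field, spins))


def apply_drive(spins, drive, J, temperature, n_steps, n_sweeps, rng, field=None):
    """Repeatedly pick a random field from the drive, apply it, and relax."""
    if field is None:
        field = [0.0] * len(spins)
    works = []
    for _ in range(n_steps):
        new_field = rng.choice(drive)
        works.append(work_of_switch(spins, field, new_field))
        field = new_field
        relax(spins, field, J, temperature, n_sweeps, rng)
    return works, field


rng = random.Random(1)
n = 20
J = random_couplings(n, rng)
spins = [rng.choice([-1, 1]) for _ in range(n)]
drive_a = random_drive(n, 3, 2.0, rng)
drive_b = random_drive(n, 3, 2.0, rng)

works_a1, field = apply_drive(spins, drive_a, J, 0.5, 50, 2, rng)
works_b, field = apply_drive(spins, drive_b, J, 0.5, 50, 2, rng, field)
works_a2, field = apply_drive(spins, drive_a, J, 0.5, 50, 2, rng, field)

for label, works in [("A, first time", works_a1), ("B", works_b), ("A, again", works_a2)]:
    print(label, "peak work absorbed:", max(works))
```

Comparing the peak for "A, again" against the peak for "A, first time" is the toy analogue of asking whether the glass recognizes a familiar drive.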

So spin glasses learn drives that push them far from equilibrium. So do many other simple, classical, many-particle systems: polymers, viscous liquids, crumpled sheets of Mylar, and more. Researchers have predicted such learning and observed it experimentally.

Scientists have detected many-particle learning by measuring thermodynamic observables. Examples include the energy absorbed by the spin glass—what thermodynamicists call work. But thermodynamics developed during the 1800s, to describe equilibrium systems, not to study learning.

One study of learning—the study of machine learning—has boomed over the past two decades. As described by the MIT Technology Review, “[m]achine-learning algorithms use statistics to find patterns in massive amounts of data.” Users don’t tell the algorithms how to find those patterns.

It seems natural and fitting to use machine learning to learn about the learning by many-particle systems. That’s what I did with collaborators from the group of Jeremy England, a GlaxoSmithKline physicist who studies complex behaviors of many-particle systems. Weishun Zhong, Jacob Gold, Sarah Marzen, Jeremy, and I published our paper last month.

Using machine learning, we detected and measured many-particle learning more reliably and precisely than thermodynamic measures seem able to. Our technique works on multiple facets of learning, analogous to the intuition and the computational ability I encountered in my computer-science course. We illustrated our technique on a spin glass, but one can apply our approach to other systems, too. I’m exploring such applications with collaborators at the University of Maryland.

The project pushed me far from my equilibrium: I’d never worked with machine learning or many-body learning. But it’s amazing, what we can learn when pushed far from equilibrium. I first encountered this insight sophomore fall of college—and now, we can quantify it better than ever.

¹ Equilibrium is a quiet, restful state in which the glass’s large-scale properties change little. No net flow of anything, such as heat or particles, enters or leaves the system.