I took 32 hours to unravel why Netta Engelhardt’s talk had struck me.

We were participating in Quantum Information in Quantum Gravity III, a workshop hosted by the University of British Columbia (UBC) in Vancouver. Netta studies quantum gravity as a Princeton postdoc. She discussed a feature of black holes—an apparent horizon—I’d not heard of. After hearing of it, I had to grasp it. I peppered Netta with questions three times over the following day. I didn’t understand why, for 32 hours.

After 26 hours, I understood apparent horizons like so.

Imagine standing beside a glass sphere, an empty round shell. Imagine light radiating from a point source in the sphere’s center. Think of the point source as a minuscule flashlight. Light rays spill from the point source.

Which paths do the rays follow through space? They fan outward from the sphere’s center, hit the glass, and fan out more. Imagine turning your back to the sphere and looking outward. Light rays diverge as they pass you.

At least, rays diverge in flat space-time. We live in nearly flat space-time. We wouldn’t if we neighbored a supermassive object, like a black hole. Mass curves space-time, as described by Einstein’s theory of general relativity.

Imagine standing beside the sphere near a black hole. Let the sphere have roughly the black hole’s diameter—around 10 kilometers, according to astrophysical observations. You can’t see much of the sphere. So—imagine—you recruit your high-school-physics classmates. You array yourselves around the sphere, planning to observe light and compare observations. Imagine turning your back to the sphere. Light rays would converge, or flow toward each other. You’d know yourself to be far from Kansas.

Picture you, your classmates, and the sphere falling into the black hole. When would everyone agree that the rays switch from diverging to converging? Sometime after you passed the event horizon, the point of no return.¹ Before you reached the singularity, the black hole’s center, where space-time warps infinitely. The rays would switch when you reached an in-between region, the apparent horizon.

Imagine pausing at the apparent horizon with your sphere, facing away from the sphere. Light rays would neither diverge nor converge; they’d point straight. Continue toward the singularity, and the rays would converge. Reverse away from the singularity, and the rays would diverge.
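In the language of general relativity, the diverge / straight / converge test tracks the *expansion* $\theta$ of a bundle of outgoing light rays: the fractional rate at which the bundle’s cross-sectional area $\delta A$ grows as the rays travel ($\lambda$ is an affine parameter along them). The flat-space value below is a quick check under the simplest assumption, light spreading outward through empty space; near a black hole, $\theta$ has to be computed from the curved geometry instead.

$$
\theta = \frac{1}{\delta A}\,\frac{d(\delta A)}{d\lambda},
\qquad
\text{flat space: } \delta A \propto r^{2},\ \lambda = r
\;\Longrightarrow\;
\theta = \frac{2}{r} > 0 .
$$

Diverging rays have $\theta > 0$, converging rays have $\theta < 0$, and the apparent horizon is where $\theta = 0$.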

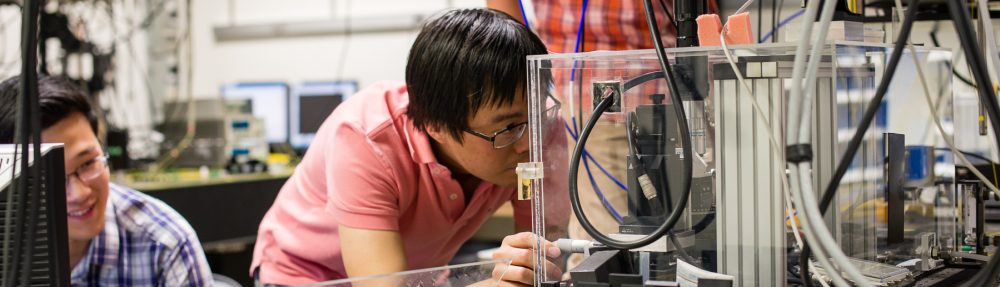

UBC near twilight

Rays diverged from the horizon beyond UBC at twilight. Twilight suits UBC as marble suits the Parthenon; and UBC’s twilight suits musing. You can reflect while gazing on reflections in glass buildings, or reflections in a pool by a rose garden. Your mind can roam as you roam paths lined by elms, oaks, and willows. I wandered while wondering why the sphere intrigued me.

Science thrives on instrumentation. Galileo improved the telescope, which unveiled Jupiter’s moons. Alexander von Humboldt measured temperatures and pressures with thermometers and barometers, charting South America around 1800. The Large Hadron Collider revealed the Higgs particle’s mass in 2012.

The sphere reminded me of a thermometer. As thermometers register temperature, so does the sphere register space-time curvature. Not that you’d need a sphere to distinguish a black hole from Kansas. Nor do you need a thermometer to distinguish Vancouver from a Brazilian jungle. But thermometers quantify the distinction. A sphere would sharpen your observations’ precision.

A sphere and a light source—free of supercolliders, superconductors, and superfridges. The instrument boasts not only profundity, but also simplicity.

Alexander von Humboldt

Netta proved a profound theorem about apparent horizons, with coauthor Aron Wall. Jakob Bekenstein and Stephen Hawking had studied event horizons during the 1970s. An event horizon’s area, Bekenstein and Hawking showed, is proportional to the black hole’s thermodynamic entropy. Netta and Aron proved a proportionality between another area and another entropy.
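To put numbers to the Bekenstein-Hawking proportionality, here is a minimal sketch for the simplest case, a non-rotating, uncharged (Schwarzschild) black hole; that choice is an assumption, since the post doesn’t specify the black hole’s type. It uses the horizon radius $r_s = 2GM/c^2$ and the entropy $S/k_B = A c^3/(4G\hbar)$, and feeds in the roughly 10-kilometer horizon from the sphere thought experiment. The function names are illustrative, not from any library.

```python
import math

# CODATA 2018 constants, SI units
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J s
M_SUN = 1.98847e30      # solar mass, kg (approximate)


def schwarzschild_radius(mass_kg: float) -> float:
    """Horizon radius of a non-rotating, uncharged black hole: r_s = 2GM/c^2."""
    return 2.0 * G * mass_kg / C**2


def mass_from_diameter(diameter_m: float) -> float:
    """Invert r_s = 2GM/c^2 for a horizon of the given diameter."""
    return (diameter_m / 2.0) * C**2 / (2.0 * G)


def bekenstein_hawking_entropy(mass_kg: float) -> float:
    """Entropy in units of k_B: S/k_B = A c^3 / (4 G hbar), with A = 4 pi r_s^2."""
    r_s = schwarzschild_radius(mass_kg)
    area = 4.0 * math.pi * r_s**2
    return area * C**3 / (4.0 * G * HBAR)


if __name__ == "__main__":
    m = mass_from_diameter(10e3)  # a horizon about 10 km across
    print(f"mass    = {m / M_SUN:.2f} solar masses")
    print(f"entropy = {bekenstein_hawking_entropy(m):.2e} k_B")
```

For a horizon 10 km across, the sketch gives a mass under two solar masses and an entropy of order $10^{77}$ in units of $k_B$.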

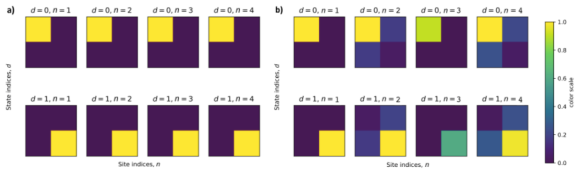

They calculated an apparent horizon’s area, $A$. The math that represents their black hole represents also a quantum system, by a duality called AdS/CFT. The quantum system can occupy any of several states. Different states encode different information about the black hole. Consider the information needed to describe, fully and only, the region outside the apparent horizon. Some quantum state $\rho$ encodes this information.

$\rho$ encodes no information about the region behind the apparent horizon, closer to the black hole. How would you quantify this lack of information? With the von Neumann entropy $S(\rho)$. This entropy is proportional to the apparent horizon’s area:

$$S(\rho) \propto A .$$
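The von Neumann entropy has a standard formula, $S(\rho) = -\mathrm{Tr}(\rho \ln \rho)$, which reduces to $-\sum_i p_i \ln p_i$ over the eigenvalues $p_i$ of $\rho$. As a minimal sketch (NumPy, a toy single-qubit example, nothing specific to AdS/CFT), the function below evaluates it for two states: one carrying complete information, which has zero entropy, and one carrying none, which has entropy $\ln 2$. The function name and the $10^{-12}$ cutoff are illustrative choices.

```python
import numpy as np


def von_neumann_entropy(rho: np.ndarray) -> float:
    """S(rho) = -Tr(rho ln rho), in nats, computed from rho's eigenvalues."""
    # A density matrix is Hermitian, so eigvalsh returns its real eigenvalues p_i.
    p = np.linalg.eigvalsh(rho)
    # Drop (numerically) zero eigenvalues, since p ln p -> 0 as p -> 0.
    p = p[p > 1e-12]
    # Entropy is non-negative; clamp round-off (and -0.0) to zero.
    return max(0.0, float(-np.sum(p * np.log(p))))


# Pure state |0><0|: we know everything about the qubit.
pure = np.array([[1.0, 0.0], [0.0, 0.0]])
# Maximally mixed state I/2: we know nothing about the qubit.
mixed = np.eye(2) / 2

print(von_neumann_entropy(pure))
print(von_neumann_entropy(mixed))
```

The less a state pins down, the larger its entropy, which is the sense in which $S(\rho)$ quantifies missing information.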

Netta and Aron entitled their paper “Decoding the apparent horizon.” Decoding the apparent horizon’s allure took me 32 hours and took me to an edge of campus. But I didn’t mind. Edges and horizons suited my visit as twilight suits UBC. Where can we learn, if not at edges, such as where quantum information meets other fields?

With gratitude to Mark van Raamsdonk and UBC for hosting Quantum Information in Quantum Gravity III; to Mark, the other organizers, and the “It from Qubit” Simons Foundation collaboration for the opportunity to participate; and to Netta Engelhardt for sharing her expertise.

¹ Nothing that draws closer to a black hole than the event horizon can turn around and leave, according to general relativity. The black hole’s gravity pulls too strongly. Quantum mechanics implies that information leaves, though, in Hawking radiation.