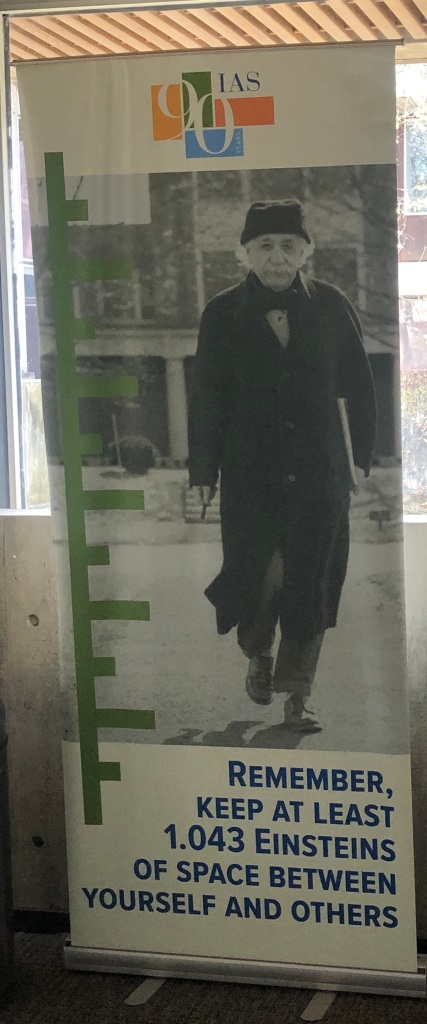

I didn’t fancy the research suggestion emailed by my PhD advisor.

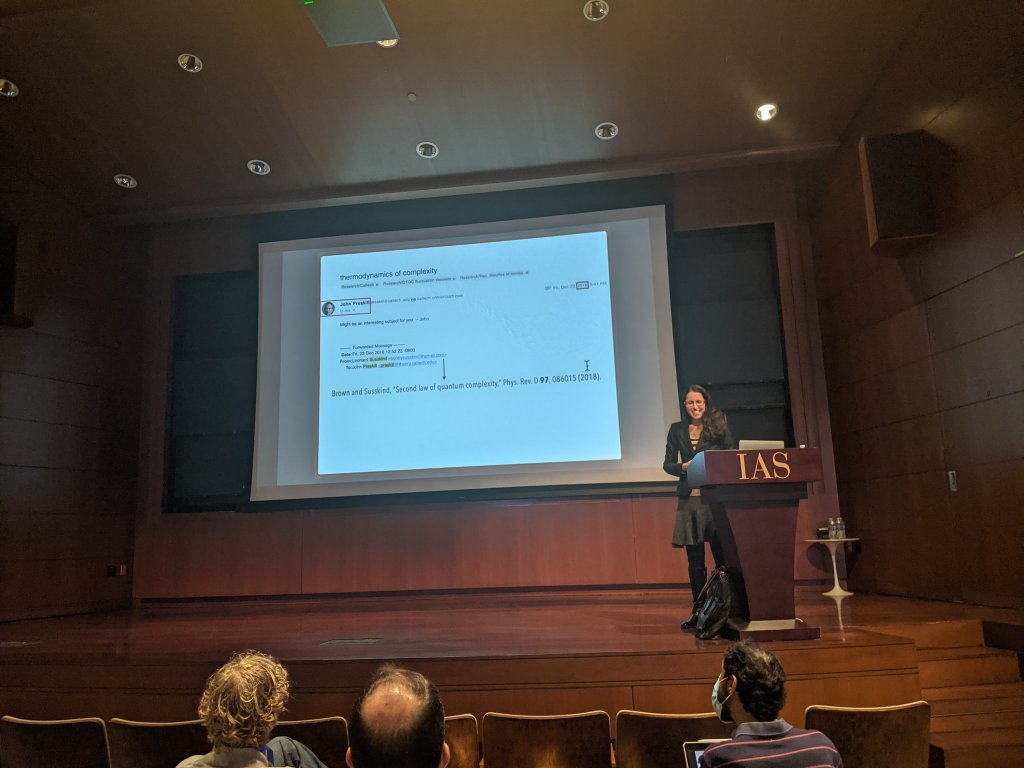

A 2016 email from John Preskill led to my publishing a paper about quantum complexity in 2022, as I explained in last month’s blog post. But I didn’t explain what I thought of his email upon receiving it.

It didn’t float my boat. (Hence my not publishing on it until 2022.)

The suggestion contained ingredients that ordinarily would have caulked any cruise ship of mine: thermodynamics, black-hole-inspired quantum information, and the concept of resources. John had forwarded a paper drafted by Stanford physicists Adam Brown and Lenny Susskind. They act as grand dukes of the community sussing out what happens to information swallowed by black holes.

We’re not sure how black holes work. However, physicists often model a black hole with a clump of particles squeezed close together and so forced to interact with each other strongly. The interactions entangle the particles. The clump’s quantum state, let’s call it $|\psi(t)\rangle$, grows not only complicated with time ($t$), but also complex in a technical sense: Imagine taking a fresh clump of particles and preparing it in the state $|\psi(t)\rangle$ via a sequence of basic operations, such as quantum gates performable with a quantum computer. The smallest number of basic operations needed is called the complexity of $|\psi(t)\rangle$. A black hole’s state has a complexity believed to grow in time (and grow and grow and grow) until plateauing.
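A toy sketch can make “count the basic operations” concrete. Everything in it is an assumption for illustration, not the setup of Adam and Lenny’s paper: one qubit instead of a clump, a hypothetical gate set containing only the Hadamard gate H and the T gate, exact rather than approximate preparation, and states that differ only by a global phase treated as equal. Breadth-first search tries circuits in order of length, so the first circuit that reaches the target uses the fewest gates.

```python
import math
from collections import deque

# Toy gate set (an assumption for illustration): Hadamard and T on one qubit.
# Each gate is a 2x2 matrix stored as ((a, b), (c, d)).
S2 = 1 / math.sqrt(2)
OMEGA = complex(math.cos(math.pi / 4), math.sin(math.pi / 4))  # e^{i pi/4}
GATES = {
    "H": ((S2, S2), (S2, -S2)),
    "T": ((1, 0), (0, OMEGA)),
}


def apply(gate, state):
    """Multiply a 2x2 gate into a state vector (x, y)."""
    (a, b), (c, d) = gate
    x, y = state
    return (a * x + b * y, c * x + d * y)


def canonical(state, digits=9):
    """Strip the global phase and round, so physically identical states compare equal."""
    x, y = state
    lead = x if abs(x) > 1e-9 else y
    phase = lead / abs(lead)
    x, y = x / phase, y / phase
    return (round(x.real, digits), round(x.imag, digits),
            round(y.real, digits), round(y.imag, digits))


def complexity(target, start=(1, 0), max_depth=12):
    """Fewest gates needed to carry `start` to `target` (up to a global phase).

    Breadth-first search explores circuits in order of length, so the first
    match is a shortest circuit. Returns None if none exists within max_depth.
    """
    goal = canonical(target)
    frontier = deque([(start, 0)])
    seen = {canonical(start)}
    while frontier:
        state, depth = frontier.popleft()
        if canonical(state) == goal:
            return depth
        if depth < max_depth:
            for gate in GATES.values():
                nxt = apply(gate, state)
                key = canonical(nxt)
                if key not in seen:
                    seen.add(key)
                    frontier.append((nxt, depth + 1))
    return None


if __name__ == "__main__":
    for name, state in [("|0>", (1, 0)), ("|+>", (S2, S2)), ("|1>", (0, 1))]:
        print(name, complexity(state))
```

With this gate set, `complexity((0, 1))` should return 6: T gates leave $|0\rangle$ and $|1\rangle$ unchanged up to a phase, so a shortest route to $|1\rangle$ is H, then four T gates (which together act as a Z gate), then H. Genuine complexity measures allow approximate preparation and many qubits; the toy keeps only the counting idea.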

This growth echoes the second law of thermodynamics, which helps us understand why time flows in only one direction. According to the second law, every closed, isolated system’s entropy grows until plateauing.1 Adam and Lenny drew parallels between the second law and complexity’s growth.

The less complex a quantum state is, the better it can serve as a resource in quantum computations. Recall, as we did last month, performing calculations in math class. You needed clean scratch paper on which to write the calculations. So does a quantum computer. “Scratch paper,” to a quantum computer, consists of qubits—basic units of quantum information, realized in, for example, atoms or ions. The scratch paper is “clean” if the qubits are in a simple, unentangled quantum state—a low-complexity state. A state’s greatest possible complexity, minus the actual complexity, we can call the state’s uncomplexity. Uncomplexity—a quantum state’s blankness—serves as a resource in quantum computation.
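The definition of uncomplexity in the paragraph above fits in one line. The symbols are my own shorthand, not necessarily the paper’s: $\mathcal{C}(|\psi\rangle)$ denotes a state’s complexity, $\mathcal{C}_{\max}$ the greatest complexity any state of the same qubits can have, and $\mathcal{U}(|\psi\rangle)$ the uncomplexity.

```latex
\mathcal{U}\big(|\psi\rangle\big) \;=\; \mathcal{C}_{\max} \;-\; \mathcal{C}\big(|\psi\rangle\big) \;\geq\; 0
```

Assuming that the basic operations start from the clean state $|0\rangle^{\otimes n}$, that state has $\mathcal{C} = 0$ and therefore the largest possible uncomplexity, $\mathcal{C}_{\max}$: perfectly blank scratch paper.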

Manny Knill and Ray Laflamme realized this point in 1998, while quantifying the “power of one clean qubit.” Lenny arrived at a similar conclusion while reasoning about black holes and firewalls. For an introduction to firewalls, see this blog post by John. Suppose that someone (let’s call her Audrey) falls into a black hole. If it contains a firewall, she’ll burn up. But suppose that someone tosses a qubit into the black hole before Audrey falls. The qubit kicks the firewall farther away from the event horizon, so Audrey will remain safe for longer. Also, the qubit increases the uncomplexity of the black hole’s quantum state. Uncomplexity serves as a resource to Audrey, too.

A resource is something that’s scarce, valuable, and useful for accomplishing tasks. Different things qualify as resources in different settings. For instance, imagine wanting to communicate quantum information to a friend securely. Entanglement will serve as a resource. How can we quantify and manipulate entanglement? How much entanglement do we need to perform a given communicational or computational task? Quantum scientists answer such questions with a resource theory, a simple information-theoretic model. Theorists have defined resource theories for entanglement, randomness, and more. In many a blog post, I’ve eulogized resource theories for thermodynamic settings. Can anyone define, Adam and Lenny asked, a resource theory for quantum uncomplexity?

By late 2016, I was a quantum thermodynamicist, I was a resource theorist, and I’d just debuted my first black-hole–inspired quantum information theory. Moreover, I’d coauthored a review about the already-extant resource theory that looked closest to what Adam and Lenny sought. Hence John’s email, I expect. Yet that debut had uncovered reams of questions—questions that, as a budding physicist heady with the discovery of discovery, I could own. Why would I answer a question of someone else’s instead?

So I thanked John, read the paper draft, and pondered it for a few days. Then, I built a research program around my questions and waited for someone else to answer Adam and Lenny.

Three and a half years later, I was still waiting. The notion of uncomplexity as a resource had enchanted the black-hole-information community, so I was preparing a resource-theory talk for a quantum-complexity workshop. The preparations set wheels churning in my mind, and inspiration struck during a long walk.2

After watching my workshop talk, Philippe Faist reached out about collaborating. Philippe is a coauthor, a friend, and a fellow quantum thermodynamicist and resource theorist. Caltech’s influence had sucked him, too, into the black-hole community. We Zoomed throughout the pandemic’s first spring, widening our circle to include Teja Kothakonda, Jonas Haferkamp, and Jens Eisert of Freie Universität Berlin. Then, Anthony Munson joined from my nascent group in Maryland. Physical Review A published our paper, “Resource theory of quantum uncomplexity,” in January.

The next four paragraphs, I’ve geared toward experts. An agent in the resource theory manipulates a set of qubits. The agent can attempt to perform any gate $U$ on any two qubits. Noise corrupts every real-world gate implementation, though. Hence the agent effects a gate chosen randomly from near $U$. Such fuzzy gates are free. The agent can’t append or discard any system for free: Appending even a maximally mixed qubit increases the state’s uncomplexity, as Knill and Laflamme showed.
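A fuzzy gate can be sketched as “the intended gate $U$, followed by a small random kick.” The noise model here is my assumption, not the paper’s definition: the kick is a rotation through an angle drawn uniformly from $[0, \varepsilon]$ about an axis drawn uniformly from the sphere. To stay short and dependency-free, the sketch acts on a single qubit, whereas the agent’s gates act on two qubits; the function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Product of two 2x2 complex matrices stored as ((a, b), (c, d))."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def rotation(axis, angle):
    """exp(-i * angle/2 * n.sigma) for a unit vector n = axis, in closed form."""
    nx, ny, nz = axis
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    return (
        (complex(c, -s * nz), complex(-s * ny, -s * nx)),
        (complex(s * ny, -s * nx), complex(c, s * nz)),
    )


def random_axis(rng):
    """Unit vector drawn uniformly from the sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)


def fuzzy_gate(target, epsilon, rng):
    """Hypothetical noise model: apply `target`, then a random rotation of angle <= epsilon."""
    kick = rotation(random_axis(rng), rng.uniform(0.0, epsilon))
    return matmul(kick, target)


if __name__ == "__main__":
    rng = random.Random(0)
    hadamard = ((1 / math.sqrt(2), 1 / math.sqrt(2)),
                (1 / math.sqrt(2), -1 / math.sqrt(2)))
    for _ in range(3):
        noisy = fuzzy_gate(hadamard, epsilon=0.05, rng=rng)
        print([[round(abs(x), 3) for x in row] for row in noisy])
```

Because the kick is itself unitary, every sample is a valid gate, and each entry of the sampled matrix lies within $\varepsilon$ of the corresponding entry of $U$. Shrinking $\varepsilon$ toward zero recovers $U$ exactly, so $\varepsilon$ sets how “near” the fuzzy gate stays.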

Fuzzy gates’ randomness prevents the agent from mapping complex states to uncomplex states for free (with any considerable probability). Complexity only grows or remains constant under fuzzy operations, under appropriate conditions. This growth echoes the second law of thermodynamics.

We also defined operational tasks—uncomplexity extraction and expenditure analogous to work extraction and expenditure. Then, we bounded the efficiencies with which the agent can perform these tasks. The efficiencies depend on a complexity entropy that we defined—and that’ll star in part trois of this blog-post series.

Now, I want to know what purposes the resource theory of uncomplexity can serve. Can we recast black-hole problems in terms of the resource theory, then leverage resource-theory results to solve the black-hole problem? What about problems in condensed matter? Can our resource theory, which quantifies the difficulty of preparing quantum states, merge with the resource theory of magic, which quantifies that difficulty differently?

I don’t regret having declined my PhD advisor’s recommendation six years ago. Doing so led me to explore probability theory and measurement theory, collaborate with two experimental labs, and write ten papers with 21 coauthors whom I esteem. But I take my hat off to Adam and Lenny for their question. And I remain grateful to the advisor who kept my goals and interests in mind while checking his email. I hope to serve Anthony and his fellow advisees as well.

1At least, in the thermodynamic limit, that is, if the system is infinitely large. If the system is finite-size, its entropy grows on average.

2…en route to obtaining a marriage license. My husband and I married four months after the pandemic throttled government activities. Hours before the relevant office’s calendar filled up, I scored an appointment to obtain our license. Regarding the metro as off-limits, my then-fiancé and I walked from Cambridge, Massachusetts, to downtown Boston for our appointment. I thank him for enduring my requests to stop so that I could write notes.